What I wish I knew about Python when I started

Seven years ago, I quit my job working for a startup and joined a new company that did all their development in Python. I had never used the Python language to any serious degree up until that point, but quickly learned to love the language for its intuitive nature and broad community support. At the same time, it often felt a bit like the wild west as there seemed to exist at least ten different ways to accomplish any one task, and no obvious consensus on which one was right.

Since then, through a combination of learning best practices from peers and colleagues and gaining firsthand experience through trial and error, I’ve developed a set of choices I now make—ones I only wish I had known about back then. Some of these ideas didn’t even exist seven years ago (like PEP-621), so implementing them back then would have been impossible. But now, I’m sharing them here for anyone in a similar position. Keep in mind that the Python ecosystem evolves quickly, so it’s entirely possible much of the advice here may become obsolete within a year. And of course, you may not agree with all my recommendations—and that’s okay! Feel free to debate (or roast) me in the comments.

I’ll be breaking this down into seven sections, so let’s dive in.

Folder structure and basics

The first thing I want to bring up probably seems small and inconsequential, but it was definitely something that tripped me up a few times when I first started. When starting a new project, I would naturally create a folder to store all my files, something like myproject. This folder would invariably be the root of a Git repository. I recommend avoiding storing Python files directly in the root of this folder. Instead, create a subfolder within myproject also named myproject and store your Python code there.

Alternatively, you might create a subfolder called src for the code, but if you plan on using any of your new Python files as packages, you'll probably still need another subfolder inside src called myproject. I will still include other files at the root of the repository, just not Python files. Here are some examples of other files that might live at the root:

- Dockerfile
- Justfile/Makefile
- pyproject.toml (more on this later)
- Other config files

Now keep in mind that rules are made to be broken, so there are exceptions to this. My baseline rule is to always avoid placing Python files directly at the root of a new repository when setting it up. Why, you might ask? Because the folder name of your repository will always be effectively invisible to the Python importer: it needs a subfolder in order to import things by name. So, suppose I have two files in my repository: an executable script called main.py and a utility module called utils.py from which I want to import a function. If I threw everything in the root of the repository, the folder structure would look something like this:

.
├── main.py
├── utils.py
└── README.md

…and then my main function would look something like this:

#!/usr/bin/env python

from myproject.utils import my_function

if __name__ == "__main__":
    my_function()

Well, then it simply wouldn't work, would it? When you run main.py from inside the repository, Python adds that folder to its module search path (sys.path), but that only makes the files inside it importable by their own names (import utils). The package name myproject still isn't available unless and until we have that extra nested folder, so it should look more like this structure:

.
├── README.md
└── myproject
    ├── main.py
    └── utils.py
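To see the difference concretely, here's a minimal sketch that builds the nested layout in a temporary directory and runs it from the repository root with python -m myproject.main. The file contents and the printed message are made up for illustration. With -m, Python puts the current directory (here, the repository root) on sys.path, so the name myproject resolves to the nested folder.

```python
import os
import subprocess
import sys
import tempfile
from pathlib import Path

# Hypothetical file contents mirroring the nested layout above.
UTILS_PY = 'def my_function():\n    print("hello from utils")\n'
MAIN_PY = (
    "from myproject.utils import my_function\n"
    "\n"
    'if __name__ == "__main__":\n'
    "    my_function()\n"
)


def run_nested_layout() -> str:
    """Create repo/myproject/{main,utils}.py and run main as a module."""
    with tempfile.TemporaryDirectory() as repo:
        package = Path(repo) / "myproject"
        package.mkdir()
        (package / "utils.py").write_text(UTILS_PY)
        (package / "main.py").write_text(MAIN_PY)
        env = dict(os.environ)
        # Python 3.11+ "safe path" mode would keep the cwd off sys.path.
        env.pop("PYTHONSAFEPATH", None)
        # Run from the repository root so `myproject` is importable by name.
        result = subprocess.run(
            [sys.executable, "-m", "myproject.main"],
            cwd=repo,
            env=env,
            capture_output=True,
            text=True,
            check=True,
        )
        return result.stdout.strip()


if __name__ == "__main__":
    print(run_nested_layout())  # hello from utils
```

By contrast, running python myproject/main.py puts the inner myproject/ folder itself on sys.path rather than the repository root, so the same import would fail. The sketch also relies on implicit namespace packages, which is why it works without an __init__.py.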

Again, I recognize this probably seems small and inconsequential, but coming from a non-Pythonic background, I remember it felt weird at first. Just accept that this is the convention. And while we're talking about basics, there are a few other things worth mentioning. You may have noticed that the first line of my sample Python script above included a shebang. It's standard practice to include this only in files meant to be executed directly. Furthermore, I used /usr/bin/env python in my shebang, but in the wild you might come across /usr/bin/python or /usr/local/bin/python. I recommend sticking with /usr/bin/env python, as it's the preferred approach outlined in PEP-394. Because env looks python up on your PATH, it picks up whichever interpreter is active (including a virtual environment's) instead of a hard-coded system path.

Finally, in whatever file launches your application (in this case, main.py), it is conventional to include that if __name__ == "__main__": block at the bottom (shown above) to specify the entrypoint to your application. The block only runs when the file is executed directly, not when it is imported. This isn't strictly necessary in many use cases. For instance, if you're writing a REST API using FastAPI and launching it via uvicorn, you don't need this block. But it also doesn't hurt to have it regardless. It's a helpful reminder of where things begin.

One critical piece of learning I want to share is that, when creating files, you should do your best not to give them the same names as Python standard library modules. If you do, it will inevitably cause import issues: the directory of the script you run is searched before the standard library, so your file shadows the real module. I remember burning more time than I would care to admit, scratching my head over why one of my scripts wasn't running, all because I had created a file named copy.py or email.py (which conflict with the copy and email standard library modules, respectively). So, watch out for that!
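Here's a small sketch that reproduces the problem with a throwaway project directory. It asks a fresh interpreter which file import email resolves to, with and without a local email.py sitting next to it. The helper name and the file contents are hypothetical.

```python
import os
import subprocess
import sys
import tempfile
from pathlib import Path


def which_email_module(shadow: bool) -> str:
    """Return the file that `import email` resolves to in a scratch directory."""
    with tempfile.TemporaryDirectory() as project:
        if shadow:
            # A hypothetical project file that happens to share a stdlib name.
            (Path(project) / "email.py").write_text("SHADOWED = True\n")
        env = dict(os.environ)
        env.pop("PYTHONSAFEPATH", None)  # keep the cwd at the front of sys.path
        result = subprocess.run(
            [sys.executable, "-c", "import email; print(email.__file__)"],
            cwd=project,
            env=env,
            capture_output=True,
            text=True,
            check=True,
        )
        return result.stdout.strip()


if __name__ == "__main__":
    print(which_email_module(shadow=False))  # the stdlib email package's __init__.py
    print(which_email_module(shadow=True))   # the local email.py instead
```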

Additionally, I sometimes create repositories that contain more than just Python code, in which case I further segment the folder structure. This goes beyond simply including template files alongside my Python code—I mean entirely separate projects within the same repository. This approach is often referred to as building a monorepo, where a single repository houses multiple projects. The most common structure I use is really more of a pseudo-monorepo containing two different projects: a backend and a frontend application. In many cases, my frontend application doesn’t include any Python at all, as JavaScript frameworks often make more sense. In such cases, my folder structure might look more like this:

.
├── README.md
├── backend
│   ├── myproject
│   │   ├── main.py
│   │   └── util.py
│   └── pyproject.toml
└── frontend
    └── package.json

Sometimes when writing applications in Python (or any language, really), you may find yourself writing common utility functions that aren't specific to any one application. These might include functions for database connections, common data transformations, or reusable subclasses for workflow orchestration. In such cases, I recommend utilizing the earlier folder structure, where I create a folder called myproject at the root of the repository. To keep things organized, I also recommend making use of the __all__ dunder variable to cleanly define what utilities are exported.

To demonstrate this, I created a sample repository called rds-utils, which I reference later when we discuss publishing packages. If you peruse that repository, you'll see how I have things structured for this library. Note the package itself is called rds-utils with a hyphen, but the root-level project folder is named rds_utils with an underscore. This is intentional. When writing import statements in Python, you cannot use hyphens, though it is relatively common to see hyphens used in the package names themselves. So with this repo, you would run pip install rds-utils but then use something like from rds_utils import fetch_query in your scripts.

I also use the __all__ special variable in my __init__.py file. Generally, I prefer to leave most of my __init__.py files completely blank (or omit them entirely), but re-exporting a library's public functions there and listing them in __all__ is my one exception. Note that __all__ on its own only controls what from rds_utils import * pulls in; it's the accompanying import statement in __init__.py that makes from rds_utils import fetch_query work. This essentially allows you to create a cleaner interface when building shared libraries so that consumers of your library don't have to worry about internal package structures. The way I have it set up right now, it's still possible to directly import things like from rds_utils.utils import fetch_query, but it's much nicer that consumers don't need to know about that extra .utils that I have defined there.
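As a minimal sketch of the pattern (using a hypothetical package named mylib, not the actual contents of rds-utils), the __init__.py below re-exports the public function from its internal module and lists it in __all__. The script writes the package to a temporary directory and imports it so it can run on its own.

```python
import importlib
import sys
import tempfile
from pathlib import Path
from types import ModuleType

# Hypothetical internal module: mylib/utils.py
UTILS_PY = '''\
def fetch_query(sql: str) -> str:
    """Stand-in for a real database helper."""
    return f"running: {sql}"


def _internal_helper() -> None:
    """Private by convention, so it is not re-exported."""
'''

# Hypothetical mylib/__init__.py: the import binds fetch_query at package
# level, and __all__ declares the public API (it also governs `import *`).
INIT_PY = '''\
from mylib.utils import fetch_query

__all__ = ["fetch_query"]
'''


def build_and_import(root: Path) -> ModuleType:
    """Write the mylib package under `root` and import it."""
    package = root / "mylib"
    package.mkdir()
    (package / "utils.py").write_text(UTILS_PY)
    (package / "__init__.py").write_text(INIT_PY)
    for name in ("mylib", "mylib.utils"):
        sys.modules.pop(name, None)  # start fresh if run more than once
    sys.path.insert(0, str(root))
    importlib.invalidate_caches()
    try:
        return importlib.import_module("mylib")
    finally:
        sys.path.remove(str(root))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        mylib = build_and_import(Path(tmp))
        print(mylib.fetch_query("SELECT 1"))  # running: SELECT 1
        print(mylib.__all__)  # ['fetch_query']
```

Without __all__, a star import would also pull in the utils submodule name; with it, consumers see only fetch_query.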

Virtual environments and dependency management

In the seven years I've been working with Python, the landscape has changed dramatically. It feels especially true now, as the Python community reaps the benefits of what you might call the "Rust Renaissance." So although it still seems very nascent, I want to focus on a tool called uv, which I've recently adopted and which has streamlined my workflow by replacing three other tools I previously relied on. While uv helps manage virtual environments, it does a whole lot more: it also manages Python versions and project dependencies, and it can even be used to build packages.

When I first started writing this post, I had planned on getting into the nuances of pyenv, pdm, pipx—all of which I had been using. Just last year, I thought this blog post comparing Python package managers was still an insightful guide, but now it already seems outdated! While tools like pyenv and pdm still have advantages over uv in certain areas, those differences are minor, and I’m increasingly confident that uv will iron out any of my grievances as they continue to move forward with development.

But let me back up and start from the perspective of a total Python beginner, as that is who this post is intended for. In Python, there are a lot of built-in libraries available to you via the Python Standard Library. This includes packages like datetime (for manipulating dates and times), smtplib (for sending emails), argparse (for building command line utilities), and so on. In addition, Python also has third-party libraries available through PyPI, the Python Package Index. You don't need to do anything special to utilize any of the Python Standard Library packages besides using an import statement in your Python script to reference them. But with PyPI packages you typically use pip install to first add them into your system, then reference them with the import keyword.

Pip is a command line package manager that comes with Python, but it is definitely not the only package manager. Other tools like pipenv, poetry, pdm, conda, and even some third-party applications like please can all manage Python dependencies.

One common mistake beginners make is installing Python, and then running pip install (or even worse, sudo pip install), before moving forward. While this works in the moment, it will almost certainly cause headaches for you later.

Within a single Python environment, you cannot have multiple versions of the same package installed at the same time. A problem arises when one piece of Python code depends upon, say, version 1 of Pydantic, while another depends on version 2. Or maybe one package depends on an older version of scikit-learn, but another needs the newer version, and so on. These are called dependency conflicts. To solve this problem, Python has a concept called virtual environments. Each one is simply an isolated context in which you can install a specific set of Python packages, unique to one application. So, if you have code in a folder named myproject1 and some other code in a folder called myproject2, you can have separate virtual environments for each.
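uv handles this for you, as described below, but to make the idea concrete, here's a sketch using the standard library's venv module. It creates two independent environments, one per project folder, and checks from inside each that it is isolated: in a virtual environment, sys.prefix points at the environment rather than at the base installation (sys.base_prefix). The folder names mirror the example above and are otherwise arbitrary.

```python
import subprocess
import sys
import tempfile
import venv
from pathlib import Path


def make_isolated_env(env_dir: Path) -> Path:
    """Create a bare virtual environment and return its Python executable."""
    # with_pip=False keeps this fast and offline; in practice a package
    # manager (uv, pip) would install dependencies into the environment.
    venv.create(env_dir, with_pip=False, symlinks=(sys.platform != "win32"))
    bin_dir = "Scripts" if sys.platform == "win32" else "bin"
    exe = "python.exe" if sys.platform == "win32" else "python"
    return env_dir / bin_dir / exe


def reports_isolated(python: Path) -> bool:
    """Ask the environment's own interpreter whether it runs inside a venv."""
    code = "import sys; print(sys.prefix != sys.base_prefix)"
    out = subprocess.run(
        [str(python), "-c", code], capture_output=True, text=True, check=True
    )
    return out.stdout.strip() == "True"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Two projects, two independent environments.
        py1 = make_isolated_env(Path(tmp) / "myproject1" / ".venv")
        py2 = make_isolated_env(Path(tmp) / "myproject2" / ".venv")
        print(reports_isolated(py1), reports_isolated(py2))  # True True
```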

For this reason, I recommend never installing any Python packages into your global environment at all. Only ever install into a virtual environment. Prior to recent updates to uv, I would have recommended installing pyenv first as a way of managing different versions of Python, and then using its pyenv-virtualenv plugin to manage your virtual environments. However, as I teased earlier, uv now handles all of this. With that foundation in place, let's see why uv is my go-to tool.

To begin, I recommend installing uv first, even before installing Python, via this command:

curl -LsSf https://astral.sh/uv/install.sh | sh

Once uv is installed, you can use it to install the latest version of Python (3.13 at the time of writing) via this command:

uv python install 3.13

That will download the specified version of Python to a subfolder under ~/.local/share/uv/python, but it won't be immediately available. You can now type uv run python to launch this instance of Python, or uv run --python 3.13 python if you want to specify the version.

Lately, I’ve been adding the following aliases to my .bashrc or .zshrc file:

alias python="uv run python"

alias pip="uv pip"

This allows me to simply type python on the command line, and it effectively calls that same uv run python command to launch the Python REPL. Or I can type pip list, and it will call uv pip list instead.

So at this point, we have uv managing our different versions of Python (because inevitably you will need to update both), but we are still operating within the global environment for that version of Python. As I mentioned before, we want to utilize virtual environments as a means of segregating dependencies for different projects.

Even when using uv, there are multiple ways of going about this. You could type uv venv, which will create a folder named .venv, then run source .venv/bin/activate to activate it, and then run uv pip install (or just pip install with the alias I mentioned earlier) to install dependencies into it. You could do that, but that's not what I recommend. If you're brand new to virtual environments, you might not realize they are something you have to "activate" and "deactivate." But before I suggest a better alternative, I need to introduce one more concept: Python packaging.

Virtual environments solve one problem: conflicting dependencies across different projects. But they don’t solve an accompanying problem, which is what to do when you’ve created a project and need to package it to share with others. Virtual environments live on your computer and provide an isolated space to install dependencies, but if you want to publish your project to the Python Package Index, or if you want to install the project inside a Docker container, a separate process is involved as virtual environments aren’t portable.

Different tools have sprung up over the years to solve this problem, some of which I already mentioned. Pip uses a requirements.txt file listing one dependency per line. The related pip-tools package adds a requirements.in file of loosely specified dependencies, which it compiles into a fully pinned requirements.txt. Pipenv uses a TOML-formatted file named Pipfile to define much of the same, while Anaconda and Conda use an environment.yml file. The setuptools package relies on a Python script named setup.py to handle packaging and define dependencies. And tools like poetry and pdm use a pyproject.toml file.

That last one, pyproject.toml, was first introduced by PEP-518, and PEP-621 (2020) standardized the [project] table for declaring a project's metadata and dependencies in it. In my opinion, it is the best way to handle Python packaging to date. All the others are antiquated or incomplete by comparison, and fortunately, pyproject.toml is uv's native format. Here's an example of a pyproject.toml file generated by uv:

[project]
name = "my-email-application"
version = "0.1.0"
description = "Sends emails using SES"
readme = "README.md"
requires-python = ">=3.13"
dependencies = [
    "boto3>=1.35.68",
    "click>=8.1.7",
    "tqdm>=4.67.1",
]

As you can see, it has a name, a version, a description, a reference to a README.md file, the specific Python version required, and a list of dependencies. I generated this file by first creating a project folder named my-email-application and then running the following commands from within that folder:

uv init

uv add boto3 click tqdm

In truth, I did have to manually update the pyproject.toml, which was generated by that first uv init command, because it had placeholder text in the description. But the second command (uv add) not only updated the pyproject.toml file as shown above with the list of dependencies, it also created a .venv folder and installed those dependencies into it. So now if I type uv pip list, I immediately see the following output:

Package         Version
--------------- -----------
boto3           1.35.68
botocore        1.35.68
click           8.1.7
jmespath        1.0.1
python-dateutil 2.9.0.post0
s3transfer      0.10.4
six             1.16.0
tqdm            4.67.1
urllib3         2.2.3

Because these are installed into a virtual environment, I can trust they won't conflict with any other projects I might be working on.

My recommendation is to always start a project by running uv init, which will create a pyproject.toml file (if one does not already exist). However, if you happen to be working on a project that already has this file, you'll need to call the following two commands in order instead:

uv lock

uv sync

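The first will use the existing pyproject.toml file to create another file called uv.lock, while the second will create a virtual environment and install all the locked dependencies into it. (Strictly speaking, uv sync will also create or update uv.lock on its own, but running uv lock first keeps the two steps explicit.)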

Further, I recommend only installing dependencies using uv add, instead of something like uv pip install. Using uv add will not only install dependencies into your virtual environment, but will also update your pyproject.toml and uv.lock files. This means you don't have to treat dependency installation and packaging as two separate things.

Running Python on Windows

If you are running Microsoft Windows, I want to advise one more prerequisite step that you need to take before getting started with Python or uv: install the Windows Subsystem for Linux, also known as WSL2. Do not, for the love of all that is good and holy, install Python tooling directly in Windows; rather, install WSL first. This guide outlines all the steps you need to take to get started, though I recommend downloading WSL from the Releases page on GitHub instead of from the Microsoft Store as advised in Step 3.

WSL transforms the Windows command prompt into a Linux terminal, along with its own Linux-based filesystem in a cordoned-off part of your hard drive. The files there are still accessible through programs like VS Code, via its WSL extension. Installing WSL does mean you will need to learn Linux syntax, but it will be worth it. So for Windows users, install WSL first, then install uv.

A note on tools

It's also worth mentioning that there are some utility packages offering helpful tools that I like to make globally available. This approach should be used sparingly, since most of the time you will want to install project dependencies with uv add as I've described above. But there are certain tools that cover cross-cutting concerns and don't properly belong to any one project you're writing.

An example of a cross-cutting concern would be something like code formatting, or CLI utilities that are helpful during development but not properly part of the codebase itself. I personally use only four of these tools, but here are some of the more popular examples:

- black - a Python code formatter
- flake8 - a Python linter
- isort - a utility for sorting import statements
- lefthook - a Git hooks manager
- mypy - a static type checker for Python
- nbstripout - a utility that strips output from Jupyter notebooks
- pdm - another package manager
- poetry - another package manager
- pre-commit - a Git hooks manager
- pylint - a static code checker for Python
- ruff - a Python linter and code formatter
- rust-just - a modern command runner, similar to Make
- uv-sort - a utility for alphabetizing dependencies in pyproject.toml

These days, I only keep lefthook, mypy, ruff, and rust-just installed. I've stopped using pdm in favor of uv, and I've stopped using tools like black and isort in favor of ruff. In the past, I might have installed these utilities with a command like pip install black or pipx install pdm. But with uv, the equivalent command is uv tool install followed by the name of the tool. This makes the executable available globally regardless of whether you're in a virtual environment. You can also run uv tool upgrade --all periodically to make sure you're using the latest versions.

I use three criteria to determine whether to install a tool via uv tool install or the usual uv add:
- The tool must primarily provide a binary application (not a library)
- The tool must address a cross-cutting concern (such as code formatting)
- The tool must not be something you would otherwise bundle with an application itself

That said, there is one tool that arguably covers a cross-cutting concern but that I still recommend adding to the project the normal way: pytest. Pytest is used for running unit tests within your project, so it should be added as a development dependency. Add it with a command like this:

uv add --dev pytest
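As a minimal sketch of what pytest picks up, a hypothetical test file (say, tests/test_utils.py) only needs functions whose names start with test_ that use plain assert statements; running uv run pytest would then discover and run them inside the project's environment. The slugify function here is a stand-in for your own code, which you'd normally import from your package instead.

```python
# tests/test_utils.py (hypothetical): pytest collects functions named test_*.
import re


def slugify(title: str) -> str:
    """Stand-in for a function from your package; normally you'd import it."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def test_slugify_replaces_spaces():
    assert slugify("Hello World") == "hello-world"


def test_slugify_strips_punctuation():
    assert slugify("  What I wish I knew!  ") == "what-i-wish-i-knew"
```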

There are other development dependencies you might consider adding (like coverage), but in general I tend to install things as tools rather than dev dependencies. It really depends on the use case.

Publishing packages

uv makes it remarkably easy to publish shared libraries and other utilities you've written in Python as packages, either on the public PyPI repository or in private artifact registries (e.g. GitLab Artifacts, AWS CodeArtifact, Google Artifact Registry, Artifactory, Sonatype Nexus, etc.). You only need to make minor modifications to your pyproject.toml file to support publishing, and then set some environment variables. I'll reference my rds-utils package I highlighted earlier to illustrate how this works. As you can see from that project's pyproject.toml file, I have added two blocks that aren't there by default:

- [project.urls]
- [tool.uv]

The list of project.urls is not strictly required, but when publishing specifically to PyPI it means the website that PyPI auto-generates for your package will link back to your repository. I went ahead and published this library to PyPI here. You can see the left navigation bar lists the three "Project Links." The tool.uv section defines the publish URL, which in this case is PyPI's upload endpoint. If you're using a private registry such as AWS CodeArtifact, you can swap that out here. For instance, a CodeArtifact repository URL might look something like this:

[tool.uv]
publish-url = "https://registry-name-123456789012.d.codeartifact.us-east-1.amazonaws.com/pypi/artifacts/"
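For comparison, the two blocks together might look something like the sketch below when targeting PyPI. The repository URLs are placeholders and the link labels (Homepage, Repository, Issues) are ones I picked for illustration, not necessarily what rds-utils uses; https://upload.pypi.org/legacy/ is PyPI's upload endpoint, which is also uv's default.

```toml
[project.urls]
# Placeholder links; PyPI shows these under "Project Links".
Homepage = "https://github.com/your-name/your-package"
Repository = "https://github.com/your-name/your-package"
Issues = "https://github.com/your-name/your-package/issues"

[tool.uv]
# PyPI's upload endpoint; swap in a private registry URL as needed.
publish-url = "https://upload.pypi.org/legacy/"
```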

Note: Authentication for the upload endpoint is not defined here, nor should it be. Usernames, passwords, and/or tokens should never be hard-coded into a file.

In the case of a private registry, you may be given an actual username and password. In the case of the public PyPI registry, you need to generate an API token, and that token effectively becomes the password while the literal string __token__ is the username. To use uv for publishing, you need to set the values of these environment variables:

- UV_PUBLISH_USERNAME
- UV_PUBLISH_PASSWORD

With those values set, you then need to run the following two commands in order:

uv build

uv publish
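If you'd rather script those two steps (in CI, for instance), here's a minimal sketch in Python. It assumes a PyPI API token has been exported in an environment variable I've named PYPI_TOKEN purely for illustration, and it hands the credentials to uv publish only through the UV_PUBLISH_* variables described above, never through a file.

```python
import os
import subprocess


def publish_env(pypi_token: str) -> dict[str, str]:
    """Copy the current environment and add uv's publish credentials.

    PyPI expects the literal username __token__ with the API token as password.
    """
    env = dict(os.environ)
    env["UV_PUBLISH_USERNAME"] = "__token__"
    env["UV_PUBLISH_PASSWORD"] = pypi_token
    return env


def build_and_publish() -> None:
    """Run `uv build` then `uv publish` with credentials from the environment."""
    # PYPI_TOKEN is a hypothetical variable name: set it in your shell or CI
    # secrets store rather than hard-coding the token anywhere.
    env = publish_env(os.environ["PYPI_TOKEN"])
    subprocess.run(["uv", "build"], check=True)
    subprocess.run(["uv", "publish"], env=env, check=True)
```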

And voila! Assuming you've specified the right credentials, you should now have a published Python package in your repository of choice. In the past, this might have involved defining a setup.py file at the root of your repository, and using utility packages such as setuptools, wheel, and twine to build and upload everything, but now uv serves as a complete replacement for all of those.

Installing libraries from private repositories

If you built your shared library package and published it to the public PyPI repository (as I did with my rds-utils package), you don't have to do anything special to utilize it in future projects: you can simply use pip install rds-utils or uv add rds-utils. But if you pushed your code to a private repository (common when developing commercial applications for private companies), you'll have to do a little bit more to tell uv (or pip) where to pull your package from and how to authenticate.

I ran a quick experiment by creating a private CodeArtifact repository in my personal AWS account and publishing my same rds-utils package to it using the steps described above. Then I created a second project in which I wanted to install that library, again using uv init to create my pyproject.toml file. This time, I needed to manually add another section called [[tool.uv.index]] to that file. In my case, it looked like this:

[[tool.uv.index]]
name = "codeartifact"
url = "https://registry-name-123456789012.d.codeartifact.us-east-1.amazonaws.com/pypi/artifacts/simple/"

You can add as many of these sections to your pyproject.toml file as you want, but it's unlikely you'll need more than one at a time. This is because a single private registry can host as many different Python packages as you like. Even GitLab's project-based, built-in artifact registries still have a mechanism for pulling things at the group level, thereby allowing better consolidation.

Again, it's critical to omit any authentication information from the URL. When publishing to CodeArtifact, this is easy, as the AWS credentials provided are already delineated into a separate URL, username, and password. But when fetching from CodeArtifact, AWS will present you with a message like this:

Use pip config to set the CodeArtifact registry URL and credentials. The following command will update the system-wide configuration file. To update the current environment configuration file only, replace global with site.

pip config set global.index-url https://aws:$CODEARTIFACT_AUTH_TOKEN@registry-name-123456789012.d.codeartifact.us-east-1.amazonaws.com/pypi/artifacts/simple/

Notice that I've omitted the aws:$CODEARTIFACT_AUTH_TOKEN@ portion of the URL in my pyproject.toml entry. This is important, because uv already has its own mechanisms for supplying credentials to package indexes, which you should utilize instead. In this example, I can forgo setting the CODEARTIFACT_AUTH_TOKEN environment variable and instead set the following two environment variables:

- UV_INDEX_CODEARTIFACT_USERNAME
- UV_INDEX_CODEARTIFACT_PASSWORD

Note that the "CODEARTIFACT" portion of those environment variables is only that value because I happened to specify name = "codeartifact" in the index definition in my pyproject.toml file. If I had set name = "gitlab" then it would be UV_INDEX_GITLAB_USERNAME instead.
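To make the naming rule explicit, here's a small sketch that derives the variable names from an index name. It assumes uv's documented convention as I understand it: the name is uppercased and any non-alphanumeric characters are replaced with underscores. The helper itself is hypothetical, not part of uv.

```python
import re


def uv_index_credential_vars(index_name: str) -> tuple[str, str]:
    """Return the (username, password) env var names uv reads for a named index.

    Assumption: uppercase the name, replace non-alphanumerics with underscores.
    """
    normalized = re.sub(r"[^A-Z0-9]", "_", index_name.upper())
    return f"UV_INDEX_{normalized}_USERNAME", f"UV_INDEX_{normalized}_PASSWORD"


print(uv_index_credential_vars("codeartifact"))
# ('UV_INDEX_CODEARTIFACT_USERNAME', 'UV_INDEX_CODEARTIFACT_PASSWORD')
print(uv_index_credential_vars("gitlab"))
# ('UV_INDEX_GITLAB_USERNAME', 'UV_INDEX_GITLAB_PASSWORD')
```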

With CodeArtifact specifically, the username should be set to the literal string "aws" while the password should be the token value generated by the AWS CLI. With GitLab, you would set the username to the literal string __token__ while the password would be an access token with the appropriate rights. Other registries will have different conventions, but hopefully you get the picture.

Publishing Cloud Functions

In addition to packaging shared libraries for PyPI and other registries, Python scripts are often deployed as Cloud Functions, such as AWS Lambda, Azure Functions, and Google Cloud Functions. After getting familiar with uv, I’ve learned it can be used to facilitate the packaging of cloud functions. The methods are a bit obscure, so I think it’s worth explaining how to do it.

In the case of Google Cloud Functions and Azure Functions, you need to deploy an actual requirements.txt file along with your Python script(s). You can utilize the following command to generate a requirements.txt file for this purpose:

uv export --no-dev --format requirements-txt --output-file requirements.txt

I recommend using the uv export command instead of uv pip freeze, because the latter does not exclude dev dependencies. uv export allows you to utilize the benefits of dev dependencies in your codebase while still ensuring what you package for deployment remains as slim as possible.

With AWS Lambda, things are a little bit different. Google and Azure take care of installing the dependencies for you on the server side, but with AWS, it is your responsibility to install the dependencies ahead of time. Moreover, there are two ways of packaging Python scripts for Lambda execution: you can either upload a ZIP file containing your scripts and dependencies, or you can build a Docker image with a very specific Python package included and push it to ECR.

In the former case, you can run commands like the following to build your deployable Lambda ZIP file:

uv pip install --target dist .
rm -rf $(basename $(pwd).egg-info) # cleanup
cp *.py dist # modify this if you have subfolders
cd dist
zip -r ../lambda.zip .
cd -

The first command installs your project and its non-dev dependencies into the dist directory (you can name this whatever you want). The remaining lines clean up the leftover .egg-info folder, copy any Python files from the root directory of your repo into the same dist directory, and ZIP both the Python scripts and dependencies up into a deployable lambda.zip. Keep in mind that if you have created scripts inside subfolders, you might need to modify my line that copies .py files to the dist folder.
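If the zip utility isn't available (say, in a minimal CI image), the last step can be done with the standard library's zipfile module instead. This is a sketch under that assumption; the dist contents in the demo (lambda_function.py and a somelib package) are hypothetical stand-ins for your code and installed dependencies.

```python
import tempfile
import zipfile
from pathlib import Path


def zip_directory(source: Path, archive: Path) -> list[str]:
    """Zip the contents of `source` (not the folder itself) into `archive`.

    Mirrors `cd dist && zip -r ../lambda.zip .`: entries are stored relative to
    `source`, so handler modules and dependencies sit at the root of the ZIP.
    Keep `archive` outside `source` so the ZIP doesn't try to include itself.
    """
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(source.rglob("*")):
            if path.is_file():
                zf.write(path, arcname=path.relative_to(source).as_posix())
    with zipfile.ZipFile(archive) as zf:
        return zf.namelist()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        dist = Path(tmp) / "dist"
        (dist / "somelib").mkdir(parents=True)
        (dist / "lambda_function.py").write_text("def handler(event, context):\n    return {}\n")
        (dist / "somelib" / "__init__.py").write_text("")
        print(zip_directory(dist, Path(tmp) / "lambda.zip"))
        # ['lambda_function.py', 'somelib/__init__.py']
```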

The other way AWS Lambda can package Python code is with a Docker container. When the image isn't built from one of AWS's own Lambda base images (as in the example below), Lambda requires you to include a special dependency called awslambdaric, the Lambda runtime interface client, so when creating a Lambda function package with uv you'll need to add that as a dependency via uv add awslambdaric. A Dockerfile for an AWS Lambda function might look like this:

FROM ghcr.io/astral-sh/uv:python3.13-bookworm-slim
WORKDIR /opt
ENV UV_CACHE_DIR=/tmp/.cache/uv

COPY pyproject.toml /opt
COPY uv.lock /opt
RUN uv sync --no-dev
COPY . /opt

ENTRYPOINT ["uv", "run", "--no-dev", "python", "-m", "awslambdaric"]
CMD ["lambda_function.handler"]

The critical factors about this:

- We have to set the UV_CACHE_DIR environment variable to something under /tmp because that's the only writable folder when Lambda is invoked
- We have to consistently use the --no-dev flag with both sync and run
- We copy the pyproject.toml and uv.lock files before calling uv sync
- We copy the rest of the files after calling uv sync, so Docker can cache the dependency layer and skip reinstalling when only code changes
- We have to set the entrypoint to call the awslambdaric package

In addition, I make use of a .dockerignore file (not shown here) so that the command to COPY . /opt only copies in Python files. My choice of /opt as the working directory is purely a matter of preference. With a Dockerfile like this, you can build and push the image into ECR and then deploy it as a Lambda function. But personally, I prefer to avoid going the Docker container route because it precludes you from being able to use Lambda's code editor in the AWS console, which I find is rather nice.
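The CMD in the Dockerfile above points at lambda_function.handler, meaning a module named lambda_function.py that defines a function named handler; the same module.function form is what you configure as the handler for the ZIP route. A minimal, hypothetical version might look like this. The statusCode/body shape is what API Gateway proxy integrations typically expect, not something Lambda itself requires.

```python
import json
from typing import Any


def handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """Hypothetical entrypoint matching CMD ["lambda_function.handler"].

    Lambda passes the invocation payload as `event` and a runtime context
    object as `context`; the return value must be JSON-serializable.
    """
    name = event.get("name", "world")
    return {"statusCode": 200, "body": json.dumps({"message": f"hello, {name}"})}


if __name__ == "__main__":
    # Local smoke test with no AWS involved; context is unused, so None is fine.
    print(handler({"name": "uv"}, None))
```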

Logging

Coming into the Python world having done Java and C# development, I really didn't know the best practices for logging. And logging things properly is so important. I was used to creating logger objects in Spring Boot applications, using dependency injection to insert them into various classes, or using a factory class to instantiate them as class members. That second approach is actually closer to what I now consider best practice for logging in Python. Only, instead of passing logger objects around between classes and files, it's better to create a unique logger object at the top of each file and let functions within that file access it from the global namespace. In other words, each file can look like this:

from logging import getLogger

logger = getLogger(__name__)

...


def my_function(my_parameter):
    """
    Notice how this function doesn't accept the logger object as a parameter;
    it simply grabs it from the global namespace by convention.
    """
    ...
    logger.info("Log message", extra={"my_parameter": my_parameter})
    ...

Omitted from that sample code is the logger configuration—let’s talk about that. When I was starting out, I quickly realized the advantages of logging things in a JSON format rather than Python’s default format. When deploying web services to the cloud, it can be immensely helpful to have all your logs consistently formatted using JSON, because then monitoring platforms like Datadog can parse your log events into a helpful, collapsible tree structure, allowing more complex searches on your log events.

When I started out using Python, I assumed this meant I had to install some special package to handle the JSON logs for me, so I installed structlog, which is a very popular logging package. Little did I know, I didn’t actually need that at all! I spent a good amount of time implementing structlog in all of my team’s projects, only to then spend more time ripping it out again—after realizing that Python’s standard library was already more than capable of printing structured/JSON logs without the need for third-party packages.
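To see why the standard library is enough, note that keys passed via extra are attached to each LogRecord as ordinary attributes, which a formatter can later pick out and serialize. Here's a minimal sketch using a throwaway in-memory handler (CapturingHandler is a name I made up for this demo, and "myproject.utils" stands in for __name__):

```python
import logging


class CapturingHandler(logging.Handler):
    """Keeps emitted records in memory so they can be inspected."""

    def __init__(self) -> None:
        super().__init__()
        self.records: list[logging.LogRecord] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.records.append(record)


# Per-module logger, as in the sample above.
logger = logging.getLogger("myproject.utils")
logger.setLevel(logging.INFO)
capture = CapturingHandler()
logger.addHandler(capture)

logger.info("Log message", extra={"my_parameter": 42})

record = capture.records[-1]
print(record.name)                      # myproject.utils
print(record.getMessage())              # Log message
print(getattr(record, "my_parameter"))  # 42: extra keys become record attributes
```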

This has been a perennial lesson for me in Python: yes, there probably is a package on GitHub that does the thing you want, but you should always check whether the thing you want can simply be done with the standard library. JSON-formatted logs are a good example, so I’ll share some simplified code. First, let’s create a file called loggers.py as follows:

```python
import json
import traceback
from logging import Formatter, LogRecord, StreamHandler, getLevelName
from typing import Any

# skip natural LogRecord attributes
# https://docs.python.org/3/library/logging.html#logrecord-attributes
_RESERVED_ATTRS = frozenset(
    k.lower()
    for k in (
        "args",
        "asctime",
        "created",
        "exc_info",
        "exc_text",
        "filename",
        "funcName",
        "levelname",
        "levelno",
        "lineno",
        "module",
        "msecs",
        "message",
        "msg",
        "name",
        "pathname",
        "process",
        "processName",
        "relativeCreated",
        "stack_info",
        "taskName",
        "thread",
        "threadName",
    )
)


class SimpleJsonFormatter(Formatter):
    def format(self, record: LogRecord) -> str:
        # populates record.message (and record.exc_text when there is an exception)
        super().format(record)
        record_data = {k.lower(): v for k, v in vars(record).items()}
        attributes = {k: v for k, v in record_data.items() if k not in _RESERVED_ATTRS}
        payload = {
            "Body": record_data.get("body") or record.getMessage(),
            "Timestamp": int(record.created),
            "SeverityText": getLevelName(record.levelno),
        }
        if record.exc_info:
            attributes["exception.stacktrace"] = "".join(traceback.format_exception(*record.exc_info))
            exc_val = record.exc_info[1]
            if exc_val is not None:
                attributes["exception.message"] = str(exc_val)
                attributes["exception.type"] = exc_val.__class__.__name__  # type: ignore
        payload["Attributes"] = attributes
        return json.dumps(payload, default=str)


def get_log_config() -> dict[str, Any]:
    return {
        "version": 1,
        "disable_existing_loggers": False,
        "formatters": {
            "json": {"()": SimpleJsonFormatter},
        },
        "handlers": {
            "console": {
                "()": StreamHandler,
                "level": "DEBUG",
                "formatter": "json",
                "stream": "ext://sys.stdout",
            }
        },
        "root": {
            "level": "DEBUG",
            "handlers": ["console"],
        },
    }
```

Within this file, we define a class called SimpleJsonFormatter, which inherits from logging.Formatter, as well as a function named get_log_config. Then, in the main.py file (or whatever your entrypoint Python file is), you can use this class and function like this:

```python
from logging import getLogger
from logging.config import dictConfig

from loggers import get_log_config

logger = getLogger(__name__)
dictConfig(get_log_config())
```

Note that you only want to call the dictConfig function exactly once, ideally right at the start of your application. The configuration returned by get_log_config routes every log message through our SimpleJsonFormatter class, so all log messages will be printed as JSON objects. But best of all, you don’t need to rely on third-party packages to achieve this! It can all be done with the standard library logging package.
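To see the “configure once, JSON everywhere” behavior end to end, here is a self-contained sketch. It deliberately swaps in a stripped-down stand-in, TinyJsonFormatter (a hypothetical name, not the SimpleJsonFormatter above), and hands the handler an in-memory io.StringIO instead of ext://sys.stdout so the output can be inspected.

```python
import io
import json
import logging
from logging.config import dictConfig


class TinyJsonFormatter(logging.Formatter):
    """Hypothetical minimal formatter: one JSON object per record."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps(
            {
                "Body": record.getMessage(),
                "SeverityText": record.levelname,
                "Logger": record.name,
            }
        )


buffer = io.StringIO()  # stands in for sys.stdout in this sketch

# Called exactly once, at application start-up
dictConfig(
    {
        "version": 1,
        "disable_existing_loggers": False,
        "formatters": {"json": {"()": TinyJsonFormatter}},
        "handlers": {
            "memory": {
                "class": "logging.StreamHandler",
                "formatter": "json",
                "stream": buffer,  # a real object works as well as an ext:// string
            }
        },
        "root": {"level": "DEBUG", "handlers": ["memory"]},
    }
)

# Any module-level logger now emits JSON through the root handler
logging.getLogger("myproject.api").warning("disk at %d%%", 91)

print(json.loads(buffer.getvalue().strip()))
# {'Body': 'disk at 91%', 'SeverityText': 'WARNING', 'Logger': 'myproject.api'}
```

Because disable_existing_loggers is False, module-level loggers created before the call (for example at import time) keep working too. Calling dictConfig a second time would replace the root logger’s handlers rather than add to them, which is one more reason to do it exactly once.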

When I first tried out the structlog package, I thought a big advantage was that you could add in as many extra attributes as you wanted, like this:

```python
import structlog

log = structlog.get_logger()
log.info("Log message", key="value", id=123)
```

But that functionality isn’t unique to structlog at all. It can be supported just the same using the standard library, via the extra parameter. So that same code snippet turns into something like this:

```python
logger.info("Log message", extra={"key": "value", "id": 123})
```

When this log statement gets passed to our SimpleJsonFormatter, it comes in as an instance of the logging.LogRecord class, and whatever values you include in the extra parameter get attached as attributes of that LogRecord and then printed when we JSON encode it.
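Here is a small, self-contained way to watch that happen. ListHandler is a hypothetical helper that keeps each LogRecord instead of printing it, so we can inspect the attributes that extra added. It also shows one gotcha worth knowing: keys that collide with built-in LogRecord attributes (such as name or message) are rejected with a KeyError.

```python
import logging


class ListHandler(logging.Handler):
    """Hypothetical helper: stores every record it receives."""

    def __init__(self) -> None:
        super().__init__()
        self.records: list[logging.LogRecord] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.records.append(record)


logger = logging.getLogger("extra_demo")
logger.setLevel(logging.INFO)
logger.propagate = False  # keep the demo's records away from any root handlers
handler = ListHandler()
logger.addHandler(handler)

logger.info("Log message", extra={"key": "value", "id": 123})

record = handler.records[0]
# Each key in extra became a plain attribute on the LogRecord...
print(record.key, record.id)  # value 123
# ...which is why vars(record) in a formatter picks it up
print(vars(record)["key"])  # value

# Keys that would overwrite built-in attributes raise KeyError
try:
    logger.info("oops", extra={"name": "clash"})
except KeyError as exc:
    print("rejected:", exc)
```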

I have given a very simplified example of a log formatter here, but there are many more powerful things you can do. For example, with additional log filters, you can utilize the OpenTelemetry standard to integrate traces and more.
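As a rough illustration of the filter idea, here is a stdlib-only sketch of a logging.Filter that stamps a trace identifier onto every record, read from a contextvars.ContextVar. This is not the OpenTelemetry API: current_trace_id and TraceIdFilter are hypothetical names, and in a real setup the OpenTelemetry tooling would supply the active trace context. Because the filter sits on the handler, every record that reaches it gets the attribute, whichever logger produced it.

```python
import contextvars
import io
import logging

# Hypothetical context variable holding the current request's trace id
current_trace_id = contextvars.ContextVar("current_trace_id", default="none")


class TraceIdFilter(logging.Filter):
    """Hypothetical filter: copies the active trace id onto each record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.trace_id = current_trace_id.get()
        return True  # never drop records, only enrich them


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.addFilter(TraceIdFilter())
handler.setFormatter(logging.Formatter("%(trace_id)s %(message)s"))

logger = logging.getLogger("trace_demo")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

token = current_trace_id.set("4bf92f3577b34da6")
logger.info("handling request")
current_trace_id.reset(token)
logger.info("outside any request")

print(stream.getvalue())
# 4bf92f3577b34da6 handling request
# none outside any request
```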

You can also rework my get_log_config Python function into YAML syntax and use it directly with some ASGI servers. Here’s the equivalent YAML:

```yaml
version: 1
disable_existing_loggers: false
formatters:
  json:
    # dotted import path: loggers.py must be importable when the server starts
    "()": loggers.SimpleJsonFormatter
handlers:
  console:
    class: logging.StreamHandler
    level: DEBUG
    formatter: json
    stream: ext://sys.stdout
root:
  level: DEBUG
  handlers: [console]
```

So if you build an application with something like FastAPI and uvicorn, you can utilize this custom logging when launching the application, like this:

```shell
uvicorn api:api --host 0.0.0.0 --log-config logconfig.yaml
```

When doing things this way, there’s no need to explicitly call the dictConfig function, as uvicorn (or whatever other ASGI server you’re using) will handle that for you.

Finding open-source software packages

As I’ve mentioned, the Python community can often leave you with the impression that it’s the wild, wild west out there. If you can think of some kind of application or library, chances are there are at least ten different versions of it already, all made by different people and all at various stages of development. The obvious example is the one I’ve already spoken about: package management. You’ve read why I think uv is the best tool for the job, but there’s also pip, pipx, poetry, pdm, pipenv, pip-tools, conda, anaconda, and more.

And if you take a look at web frameworks, there is FastAPI, Flask, Falcon, Django, and more. Or if you look at distributed computing and workflow orchestration, you might find packages like Airflow, Luigi, Dagster, or even pyspark. And the examples don’t end there! If you look at blog software, the official Python site showcases dozens upon dozens of different packages all relating to blogs, some of which haven’t seen updates in over a decade.

It can be incredibly confusing for newcomers to Python to know where to start when identifying the right tooling for a given problem. I generally advocate for the same approach Google likes to see when they interview engineering candidates: namely, asking “has someone else already solved this problem?”

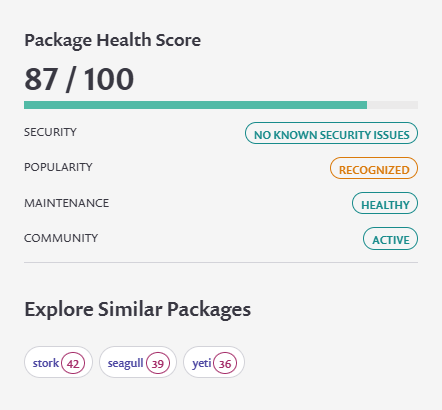

Unfortunately, there is no hard and fast rule to determine what the most appropriate software package or tool is for the problem you want to solve. The answer is almost always “it depends.” But I did discover a very powerful website that aids in the decision-making process: the Snyk Open Source Advisor. The Snyk Open Source Advisor lets you search for any public Python package published to PyPI and provides a package health score, which is a composite of four different metrics. Pictured below is an example package health score for the pelican library, which is the static site generator I use to create my blog.

The package health score is a number between 1 and 100, and the four metrics contributing to it are Security, Popularity, Maintenance, and Community. These are immensely helpful: you certainly don’t want to use a package that has known security holes. It’s generally better to home in on packages with a higher popularity score, though this becomes less true the more obscure your desired functionality is. The maintenance score is a quick way to determine whether the project is still relevant and being updated, and the community score can tell you how easy it would be to find support.

I use the Snyk Open Source Advisor all the time as a Python developer. It doesn’t completely solve the problem of determining which Python packages are the best tool for the job, but it sure helps me make better-informed decisions. There are some cases, of course, where you might deliberately choose a tool with a lower score than some other tool. Or you might find yourself comparing two frameworks that both serve the same function and have relatively high scores. In those cases, experience is really the only critical factor. But I have found that 95% of the time, I’ve been able to quickly determine the right package simply by looking at the package health score and nothing else.

Code formatting

Earlier I mentioned that I’ve been installing a few tools globally. Let’s focus specifically on three: ruff, lefthook, and mypy. ruff is a linter and code formatter written by the same people who authored uv, and I absolutely love it. Previously, I had used a combination of black, pylint, isort, and others to handle formatting and linting, but I’ve found ruff is a sufficient replacement for them all.

Wikipedia has a whole article on indentation style, which describes different code conventions for how you might format your code in different C-based languages. For instance, you might use the Allman style:

```c
while (x == y)
{
    foo();
    bar();
}
```

Or you might use the K&R style:

```c
while (x == y) {
    foo();
    bar();
}
```

I’ve witnessed one developer use one style, only to have another developer switch it to their own style in a pull request that also contained more substantive changes. This muddies the waters during code reviews, sometimes dramatically, when legitimate code changes are hiding among hundreds of lines of whitespace changes. A similar argument can be had about tabs vs. spaces. Of course, Python doesn’t have this brace-placement problem, because indentation is part of the syntax itself and defines the blocks. However, there are other areas of code style where Python doesn’t enforce a consistent convention, and this is where tools like black or ruff come in.

In Python, you might have debates over things like line length. For instance, the following two snippets of code are functionally the same, though cosmetically different:

```python
from pathlib import Path
from typing import Any

import toml  # third-party package


def load_metadata(toml_path: Path) -> dict[str, Any]:
    metadata = toml.load(toml_path)
    return {
        "contact": {"name": metadata["project"]["authors"][0]["name"], "email": metadata["project"]["authors"][0]["email"]},
        "description": metadata["project"]["description"],
        "title": metadata["project"]["name"].title(),
        "version": metadata["project"]["version"]
    }
```

```python
from pathlib import Path
from typing import Any

import toml  # third-party package


def load_metadata(toml_path: Path) -> dict[str, Any]:
    metadata = toml.load(toml_path)
    return {
        "contact": {
            "name": metadata["project"]["authors"][0]["name"],
            "email": metadata["project"]["authors"][0]["email"],
        },
        "description": metadata["project"]["description"],
        "title": metadata["project"]["name"].title(),
        "version": metadata["project"]["version"],
    }
```

Personally, I find the latter version easier to read, and in this case it’s the version you would get if you ran the code through a formatting tool like ruff. Sometimes the tool formats code in ways I wouldn’t necessarily have chosen, but the point of a code formatter is that it takes the choice away from you, which is actually a very good thing. This way, we avoid the scenario where one developer decides to change the style and you end up with long, muddied pull requests.

Python encourages other rules, like import order, as part of PEP-8. Most people coming into Python for the first time don’t realize there is a recommended best practice when it comes to sorting import statements. In fact, it’s recommended that you split your imports into a maximum of three distinct groups:

- Standard library imports

- Related third-party imports

- Local application-specific imports

Each group is meant to be separated by a single empty line. Import-sorting tools such as isort (and ruff’s isort rules) go a step further: within each group, plain module imports (lines starting with import) come first, followed by specific imports (lines starting with from), and each of these groups and sub-groups is sorted alphabetically. Wildcard imports (e.g. from package import *) should be avoided altogether, as PEP-8 itself points out.

Beyond what PEP-8 recommends, I advise never, ever using relative imports. PEP-8 concedes that explicit relative imports are an acceptable alternative in some complex package layouts, but I say there is practically no scenario that justifies them. Ruff won’t complain about relative imports out of the box (its TID252 rule can flag them once you set lint.flake8-tidy-imports.ban-relative-imports to "all"), so unless you opt in to that rule, it’s incumbent on you to simply never use them.

What’s a relative import? Python allows you to write import statements like these:

```python
from .package import something
from . import sibling
from ..pibling import thing
```

The problem with these is that they are so tightly coupled to your project’s layout that they almost scream, “I’m going to break the moment you try to share me!” A leading dot (.) tells Python to resolve the name relative to the package that contains the current module, so the first statement looks for a package.py file or a package folder sitting right next to it. (Only the from ... import form accepts dots; import .package is a syntax error.) But the dot isn’t necessary: an absolute import that starts from your top-level package, like from myproject.package import something, finds the same module and reads far more clearly. If you find yourself reaching for the dot because a name conflicts with a reserved keyword or something in Python’s standard library, you should just rename your package or file. Similarly, there’s no need to write from . import sibling when from myproject import sibling works just fine.

Lastly, avoid using the double dot to import something from outside your directory. If you’re working with a separate module intended as a shared library, it’s better to properly package it and install it into your environment rather than summoning the unholy mess of the double-dot import.

So with that out of the way, let’s see how I have ruff set up. As mentioned earlier, I first installed the ruff utility with uv tool install ruff, which makes it globally available. Then, I created a configuration file at ~/.config/ruff/ruff.toml which looks like this:

```toml
[lint]
extend-select = [
    "RUF100",  # disallow unused # noqa comments
    "I001",    # isort
]
```

This extends the default settings to include a couple of rules. RUF100 disallows unused # noqa comments, which was a helpful recommendation from a friend, and I001 makes ruff flag imports that aren’t sorted according to the grouping described above (and sort them automatically when run with --fix).

In addition to keeping these rules in my global configuration, I also copy them into the pyproject.toml file whenever I start a new project. Ruff only falls back to the user-level configuration when it can’t find a project-level one, so this ensures the rules always run, and it also means anyone else working on a given package follows the same rules. The syntax is only slightly different inside the pyproject.toml file:

```toml
[tool.ruff]
lint.extend-select = [
    "RUF100",  # disallow unused # noqa comments
    "I001",    # isort
]
```

Then you just need to run ruff format . and ruff check --fix . in sequence to apply these changes to your codebase. (The former is called formatting, while the latter is called linting.) You can also make these commands run automatically, either by hooking them into VS Code or by using a pre-commit hook. I’ve opted for the latter route, and I’ve specifically been using a tool called lefthook to accomplish this.

Lefthook is basically a faster version of pre-commit, written in Go. It allows you to define a list of rules to run before checking in your code to Git. If any of the rules fail, the commit will also fail, so you’re forced to deal with the failure before pushing your code. This setup involves creation of a lefthook.yml file in your repository. Here’s an example lefthook.yml file that I’ve been using in one of my repositories:

```yaml
pre-commit:
  commands:
    uv-sort:
      root: "backend/"
      glob: "pyproject.toml"
      run: uv-sort
    python-lint:
      root: "backend/"
      glob: "*.py"
      run: ruff check --fix {staged_files}
      stage_fixed: true
    python-format:
      root: "backend/"
      glob: "*.py"
      run: ruff format {staged_files}
      stage_fixed: true
    nbstripout:
      root: "backend/"
      glob: "*.ipynb"
      run: nbstripout {staged_files}
      stage_fixed: true
    js-lint:
      root: "frontend/"
      glob: "*.{js,mjs,cjs,ts,vue}"
      run: npx eslint --fix {staged_files}
      stage_fixed: true
    js-format:
      root: "frontend/"
      glob: "*.{js,mjs,cjs,ts,vue}"
      run: npx prettier --write {staged_files}
      stage_fixed: true
```

In this example, I have six pre-commit commands defined, and only four of them are Python-related. I’ve segmented my code into different folders for the backend API (written in Python) and the frontend application (written in React.js). This is a good illustration of how you can scope your lefthook rules to operate only on specific directories (and files) within your codebase.

As you can see, the first command makes use of the uv-sort utility previously installed via uv tool install. This command ensures the dependencies in my pyproject.toml file are sorted. The next two commands are the two ways of invoking ruff that I’ve already explained. Then I run nbstripout to clean up any output from Jupyter notebook files before committing them. The last two commands run linting and formatting on the JavaScript codebase.

Notably, I’ve opted to use the stage_fixed directive too, which means that if any of these lefthook commands change the files I’ve staged, those changes will silently and automatically be included in my commit as well. This is a choice you may want to consider; I personally find it useful because it means I never have to think about formatting. Others may prefer to have their IDE automatically run formatting and linting commands on save, and still others prefer to do neither, instead forcing a second commit with any fixes.

Noticeably absent from my lefthook.yml file is any invocation of mypy, a static type checker. Static type checking is similar to linting, but it goes even further, and there are instances where you may not care when static type checking fails. Python is a dynamically typed language, meaning that variables aren’t bound to a declared type. Python also has a robust system of type hints that lets you more explicitly designate how types flow between function and method calls. But at the end of the day, type hints are still hints, meaning the Python interpreter is not going to actually enforce them. mypy helps verify that, where type hints are specified, they are used consistently.
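A quick way to convince yourself that hints are not enforced at runtime: the interpreter happily runs a call that contradicts the annotations, and only a static checker such as mypy would flag it. The add function below is a throwaway illustration, not from any real codebase.

```python
def add(a: int, b: int) -> int:
    return a + b


# Python stores the hints but never checks them when the function runs
print(add.__annotations__)
# {'a': <class 'int'>, 'b': <class 'int'>, 'return': <class 'int'>}

# Strings violate the hints, yet the call succeeds and concatenates them;
# mypy would report incompatible argument types on this line
result = add("type", "hints")
print(result)  # typehints
```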

Hynek Schlawack, a well-known Pythonista and open source contributor, has recommended not running mypy as part of your pre-commit workflows. And Shantanu Jain, a CPython core developer and mypy maintainer, has an excellent write-up of some of the gotchas that come with running mypy as a pre-commit hook. When run through the pre-commit framework, mypy executes in an isolated environment by default, meaning it won’t have access to your project’s virtual environment and therefore won’t be able to fully analyze type hints when your code makes use of dependencies. Additionally, pre-commit hooks only receive the changed/staged files by default, whereas mypy needs to see the entire repository to function correctly. I’ve also noticed that nesting your code under other folders (like my backend/ directory) can cause problems.

Instead, I recommend setting up a Justfile at the root of your repository and defining a rule for manually running static type checks. Here’s an example of a Justfile I’ve used in a past project:

```just
PYTHON_EXECUTABLE := `cd backend && uv python find`

default:
    @just --list | grep -v "^[[:space:]]*default$"

clean:
    @find . | grep -E "(/__pycache__$|\.pyc$|\.pyo$|\.mypy_cache$|\.ruff_cache$|\.pytest_cache$)" | xargs rm -rf

init-backend:
    @cd backend && uv venv
    @cd backend && uv sync

test-backend:
    @cd backend && PYTHONPATH=$(pwd) uv run pytest .

typecheck:
    @MYPYPATH=backend mypy backend --python-executable {{PYTHON_EXECUTABLE}}
```

The key takeaway here is the final typecheck rule, which points mypy at the backend/ folder (both as the path to check and via the MYPYPATH environment variable) and calls it with specific arguments. The --python-executable argument is essential when you have dependencies, as it lets mypy use the project’s virtual environment; its value comes from the PYTHON_EXECUTABLE variable defined at the top of the file.

I’ve included four other rules here, which I’ll briefly touch on for those not familiar with Just.

- The default rule allows me to type just from the terminal to print all available rules, excluding the default rule itself.
- The clean rule quickly deletes cache files and folders that may appear after running the code, the tests, or formatting tools. (These files are also excluded in .gitignore.)
- My init-backend rule is meant to be run after cloning the repo for the first time.
- My test-backend rule runs the unit tests, which I’ll touch on in the next section.

My examples here showcase a project where I’ve put everything Python-related into a single backend folder. In many projects, you won’t have to do this, and keeping everything at the root of the repository is considerably easier to deal with. I’m sharing the nested version because it’s the harder case; to adapt it for a root-level project, you can simply remove all the cd backend && commands (and the backend paths in the typecheck rule) from the Justfile, and the root: directives from the lefthook.yml file.

Testing and debugging

Being able to debug your code is critical. For those of you who come from a data science background, this may be a new concept. If you’re using Jupyter notebooks, debugging is probably already part of your workflow, at least conceptually: notebooks build in the idea of breakpoints by having you run your code block by block. But when we move beyond Jupyter notebooks and into developing production-grade applications, good debugging practices are essential. Fortunately, the tooling in the ecosystem has improved dramatically in the last couple of years.

Hand in hand with debugging comes the ability to run unit and integration tests. Python has a couple of different frameworks for testing, including pytest and unittest, and there’s some overlap between the two. I’ll cover the basics of setting up unit tests and debugging in Python, without going into the moral question of using mocks, monkey patching, or other controversial techniques. If you’re interested in those discussions, I’ll include links to relevant articles at the end.

When I first began coding in Python, VS Code integration was still fairly immature, and it made me want to reach for JetBrains PyCharm instead. PyCharm has consistently maintained a very intuitive setup for debugging and testing Python, but it comes with a hefty price tag. However, in February 2024, I happened to catch an episode of the Test & Code podcast titled “Python Testing in VS Code,” wherein the host interviewed a Microsoft product manager and software engineer about an overhaul of the Python extension for VS Code. And I agree: testing and debugging in VS Code is way better than it used to be. So much so that I no longer recommend purchasing PyCharm; VS Code (which is free) is more than sufficient.

To get started with testing, I recommend installing pytest as a dev dependency, using uv add pytest --dev. I suggest creating a “test” folder in the root of your repository and prefixing the files within it with test_. I also use the same prefix when naming the test functions contained within those files. For example, I might have files named test_api.py, test_settings.py, or test_utils.py, and within those files the test functions would look something like this:

```python
from mymodule.utils import business_logic


def test_functionality():
    result = business_logic()
    assert result, "Business Logic should return True"
```

This simple test exercises one key aspect of unit testing: using assertions. It runs the business logic function and asserts that the result returned is truthy. Moreover, the test will fail if the business logic function raises any kind of exception. If the test fails, we will see the string message in the test output. That alone is already a massively useful tool in the toolbox for remediating and even preventing bugs, but let’s touch on a few other useful aspects about testing code in Python:

- Capturing Exceptions

- Fixtures

- Mocks

- Monkey Patching

- Parameterization

- Skip Conditions

Beyond testing whether certain code returns specific values, it’s also useful to craft tests that actually expect failure. This doesn’t mean using exceptions as control flow (which is largely considered an anti-pattern), but rather testing that invalid inputs will reliably raise an exception. You can use the raises function from pytest, which, as you’ll see here, is actually a context manager:

```python
from pytest import raises

from mymodule.utils import business_logic


def test_bad_input():
    with raises(ValueError):
        business_logic("Invalid input data")
```

As shown above, you specify an exception type (which can be a tuple of multiple types when applicable) to the raises function. Then something inside the context manager block must raise that specific exception, otherwise the whole test will fail. Useful!
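To make the context-manager mechanics less magical, here is a simplified stand-in built with contextlib.contextmanager. The name expect_raises is hypothetical, and the real pytest.raises does much more (a match= pattern, an ExceptionInfo object you can inspect via as excinfo, nicer failure output); this only sketches the core idea of swallowing the expected exception and failing when nothing is raised.

```python
from contextlib import contextmanager


@contextmanager
def expect_raises(expected):
    """Hypothetical, simplified cousin of pytest.raises."""
    try:
        yield
    except expected:
        # The expected exception (or one from a tuple of types) escaped
        # the block, so swallow it and let the test continue
        return
    # Reaching this line means the block finished without raising
    raise AssertionError(f"expected {expected!r} to be raised")


# Passes: int() raises ValueError for non-numeric text
with expect_raises(ValueError):
    int("Invalid input data")

# A tuple of types works too
with expect_raises((KeyError, IndexError)):
    {}["missing"]

# Fails: nothing inside the block raises
try:
    with expect_raises(ValueError):
        int("42")
except AssertionError as exc:
    print(exc)
```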

However, sometimes the input for your business logic function is more complex than a simple scalar value. You might also want to re-use that same complex input across multiple test functions. While you could declare the input as a global variable, this can be problematic—particularly when you have functions that modify their own inputs. It’s better to use fixtures. Here’s an example of declaring a fixture that loads JSON from a file:

```python
import json

import pytest

from mymodule.utils import business_logic


@pytest.fixture
def complex_input_data():
    with open("test_data.json") as fh:
        return json.loads(fh.read())


def test_functionality(complex_input_data):
    result = business_logic(complex_input_data)
    assert result, "Business Logic should return True"
```

As you can see, the @pytest.fixture decorator lets us use the name of our fixture function as an argument to any test function, and pytest injects the fixture’s return value automatically.

A common challenge in Python applications and scripts is dealing with functions that perform business logic that can create side effects to, or dependencies on, external systems. For instance, maybe you have a function that calculates geographical distance between two points, but it needs to query a database first. Or maybe you have a function which reformats an API response and also saves it to S3.

Now, I can already hear several of my colleagues screaming in my ear that you should simply write better code when functions are serving multiple purposes, that you should use proper inversion of control, and so on. And while those are valid opinions, the purpose of this post isn’t to debate best practices for architecture and testing. Rather, my goal is to introduce newcomers to everything Python has to offer. And with that, I need to talk about monkey patching.

Monkey patching is a feature in Python (as well as some other dynamically-typed languages) that allows you to modify the behavior of your application at runtime. Suppose you have a function like this:

```python
# mymodule/utils.py
import json

import boto3


def transform_data(input_data):
    ...  # the actual transformation logic lives here


def business_logic(input_data, bucket_name, filename):
    # manipulate the data
    result_data = transform_data(input_data)

    # save both forms to S3
    cached_data = json.dumps({"raw": input_data, "transformed": result_data})
    s3 = boto3.resource("s3")
    bucket = s3.Bucket(bucket_name)
    bucket.put_object(Key=filename, Body=cached_data)

    return result_data
```

In this case, when testing the business logic function, you want to be able to assert it performs the transformation correctly, without your unit tests actually creating new files in S3. You might be saying, “why test business_logic at all? Just test transform_data!” And in this overly simplified example, your instincts would be right. But let’s suspend disbelief for a minute.

If we want to test the business logic function and avoid writing anything to S3, we can use monkey patching to dynamically swap the call to boto3.resource() with something else. Sometimes that can be a mock object, like this:

```python
from unittest.mock import MagicMock, patch

from mymodule.utils import business_logic

# Placeholder values for this example; complex_input_data is the fixture
# from earlier, defined in this file or in a conftest.py
BUCKET_NAME = "test-bucket"
FILENAME = "cached.json"


@patch("mymodule.utils.boto3")
def test_functionality_without_side_effects(mocked_boto3, complex_input_data):
    mocked_s3 = MagicMock()
    mocked_boto3.resource.return_value = mocked_s3
    mocked_bucket = MagicMock()
    mocked_s3.Bucket.return_value = mocked_bucket

    result = business_logic(complex_input_data, BUCKET_NAME, FILENAME)

    assert result.get("transformations") == 1, "Transformations key should be present"
    mocked_bucket.put_object.assert_called_once()
```

Let’s dive into what’s happening here. I specified the patch decorator with the string mymodule.utils.boto3. Notice I didn’t import that path directly within the test script: patch (which comes from unittest.mock in the standard library, not from pytest itself) imports mymodule.utils on its own, finds the boto3 name that the module bound with its own import boto3 statement, and swaps it for a mock object for the duration of the test. So instead of reaching the real boto3 library, any reference to boto3 inside that file gets the mock object, and the original is restored once the test finishes. And that’s useful!
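The rule of thumb behind the mymodule.utils.boto3 target is “patch the name where it is looked up, not where it was defined.” Here is a small stdlib-only illustration that uses json.dumps as a stand-in for boto3; the functions via_module and via_name are hypothetical. Patching json.dumps affects code that looks the function up on the json module at call time, but not code that already bound its own reference with from json import dumps.

```python
import json
from json import dumps as imported_dumps
from unittest.mock import patch


def via_module():
    # Looks up "dumps" on the json module each time it runs
    return json.dumps({"a": 1})


def via_name():
    # Uses the reference this module bound at import time
    return imported_dumps({"a": 1})


with patch("json.dumps", return_value="patched"):
    print(via_module())  # patched
    print(via_name())    # {"a": 1}

# Outside the with block, patch has restored the original attribute
print(via_module())  # {"a": 1}
```

In the S3 example, business_logic reads boto3 through its own module’s global name, which is exactly why replacing mymodule.utils.boto3 takes effect.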

Now, based on my function definition from above, I do call s3 = boto3.resource("s3"). And here’s the beauty of mock objects in Python: any method or property of a mock object will also return another mock object. You can even override certain behaviors of mock objects, like return_value and side_effect. Back in my test function, I declare that the return value of the resource() function on boto3 should be another mock object, which I’ve named mocked_s3. Then I specify that the return value of its Bucket method should be another mock object named mocked_bucket. I don’t have to declare additional mock objects explicitly like this; I could have used something like:

```python
mocked_boto3.resource.return_value.Bucket.return_value.put_object.assert_called_once()
```

But I’m not a fan of extra-long lines like this, so when chaining calls I find it’s cleaner and more readable to declare multiple mock objects as I’ve done above. So, if you’re going to use mocks, I recommend this approach. Lastly, setting the return_value property lets you return a fixed value, whereas setting the side_effect property to a function lets you return data conditional on the input.
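Here is a compact, stdlib-only illustration of those behaviors on bare MagicMock objects: automatic child mocks along a chain of calls, a fixed return_value, and a side_effect whose result depends on the input (or that raises). The fake_lookup function and the bucket and key names are hypothetical.

```python
from unittest.mock import MagicMock

# 1. Chained calls: every attribute and return value is itself a mock
fake_boto3 = MagicMock()
fake_boto3.resource("s3").Bucket("my-bucket").put_object(Key="a.json", Body="{}")

# The long chain and the named intermediate mocks point at the same objects
mocked_s3 = fake_boto3.resource.return_value
mocked_bucket = mocked_s3.Bucket.return_value
mocked_bucket.put_object.assert_called_once_with(Key="a.json", Body="{}")
mocked_s3.Bucket.assert_called_once_with("my-bucket")

# 2. return_value: the same answer regardless of the arguments
fixed = MagicMock(return_value=42)
print(fixed("anything"), fixed(1, 2, 3))  # 42 42


# 3. side_effect as a function: the mock forwards its arguments to it
def fake_lookup(key):
    return {"a": 1, "b": 2}.get(key, 0)


conditional = MagicMock(side_effect=fake_lookup)
print(conditional("a"), conditional("z"))  # 1 0

# side_effect can also be an exception instance, which the mock raises
failing = MagicMock(side_effect=TimeoutError("S3 unavailable"))
try:
    failing()
except TimeoutError as exc:
    print(exc)  # S3 unavailable
```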

Next, pytest also offers a parametrize decorator (note the spelling) that lets you specify multiple inputs for a single test function. I grabbed this example straight from the pytest documentation to illustrate:

```python
import pytest


@pytest.mark.parametrize("test_input,expected", [("3+5", 8), ("2+4", 6), ("6*9", 42)])
def test_eval(test_input, expected):
    # pytest runs this once per tuple; the "6*9" case (54, not 42) fails on purpose
    assert eval(test_input) == expected
```

There may be cases where you want to skip running tests unless certain conditions are met. I’ve used the skipif decorator to check whether a specific environment variable was present in the case of integration tests, like this:

```python
import os

import pytest

from mymodule.utils import business_logic


# reason must be passed as a keyword argument
@pytest.mark.skipif(os.getenv("NEEDED_ENV_VAR") is None, reason="NEEDED_ENV_VAR was not set")
def test_integration():
    assert business_logic()
```

Finally, if you’re using VS Code, the built-in testing integrations are really nice to work with. Get started by clicking the Testing icon on the left menu.

Click the blue button to Configure Tests, and then choose the pytest framework. Next, you’ll need to choose the directory your project is in. In most cases this will be the root directory (.), unless you’re like me and you segment projects into a “backend” folder. If you are like me, you may also need to specify your Python interpreter by clicking on the version of Python at the bottom right of the screen, clicking “Enter interpreter path…”, and then typing out something like backend/.venv/bin/python. If you’re doing everything in the root of your repository, this will not be necessary, as VS Code has likely auto-detected your virtual environment already.

Voila! The tests should appear on the left pane along with Run and Debug buttons to execute them. Another helpful tool is the ability to run your code directly inside VS Code while using breakpoints. To get started, you’ll want to click the Run and Debug icon on the left menu.

The first time you visit this tab, it will display a message that says, “To customize Run and Debug create a launch.json file.” Simply click the link to create a launch.json file, which will bring up a command palette prompt. Choose “Python Debugger” as the first option, and then you’re presented with a list of different templates. I usually pick “Python File” or “Python File with Arguments” to start, but you can add as many templates as you like. It will create a file that looks something like this:

{
    "version": "0.2.0",
    "configurations": [
        {
            "name": "Python Debugger: Current File",
            "type": "debugpy",
            "request": "launch",
            "program": "${file}",
            "console": "integratedTerminal"
        }
    ]
}
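To have something to step through, here is a minimal, hypothetical main.py (the greet function and the --name flag are made up for illustration). With the “Current File” configuration, open this file and press the play button. The “Python File with Arguments” template is meant for passing command-line arguments, such as --name Ada, which argparse then parses. A breakpoint on the return line inside greet lets you inspect name in the debugger.

```python
import argparse
from typing import Optional, Sequence


def greet(name: str) -> str:
    # A convenient spot for a breakpoint: inspect `name` in the debugger.
    return f"Hello, {name}!"


def main(argv: Optional[Sequence[str]] = None) -> str:
    # When argv is None, argparse reads sys.argv[1:], i.e. whatever arguments
    # the debugger (or the command line) passed to the script.
    parser = argparse.ArgumentParser(description="Toy script for trying out the debugger.")
    parser.add_argument("--name", default="world", help="who to greet")
    args = parser.parse_args(argv)
    message = greet(args.name)
    print(message)
    return message


if __name__ == "__main__":
    main()
```

Accepting an optional argv in main is a small design choice that also makes the script easy to call from a test without touching sys.argv.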

Back in the Run and Debug tab, you should now see a dropdown menu at the top (with your “Python Debugger” configuration selected by default) and a small green play button to its right. Click this button to launch Python in debug mode. If you’re using the default “Current File” configuration, make sure your main.py file is open and in focus, not your newly created launch.json file. Set breakpoints by clicking just to the left of the line numbers in your code (a red dot appears), and now you’re cooking with gas!

My last bit on debugging is fairly uncontroversial, but my earlier section on using mocks can be divisive. A lot of seasoned developers actively discourage the use of mocks, or restrict themselves to specific kinds of test doubles (e.g. stubs and fakes). So if you’re curious, here’s some recommended reading:

- Don’t Mock What You Don’t Own by Hynek Schlawack

- Mock Hell by Edwin Jung

- Mocks Aren’t Stubs by Martin Fowler

- Fast tests for slow services: why you should use verified fakes by Itamar Turner-Trauring

- Stop Mocking, Start Testing by Augie Fackler & Nathaniel Manista

One takeaway from those articles is that using too many mock objects makes later refactoring super painful, because you have to track down every mock that depends on the code you changed. They also recommend writing your tests against an interface (i.e. the public API) rather than testing specific implementation details. Ask yourself: “How much could the implementation change without us having to change our tests?” And finally, several experts advise using fakes almost exclusively instead of mocks, treating mocks as a tool of last resort.
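To make the fakes-versus-mocks distinction concrete, here’s a small sketch. The names (UserRepository, InMemoryUserRepository, register_user) are hypothetical and not taken from any of the articles above. The fake is a working in-memory implementation of the same interface, so its test only checks observable behavior through the public API. The mock-based test instead asserts on which method was called and with what arguments, which ties it to the implementation.

```python
from typing import Dict, Optional, Protocol
from unittest.mock import Mock


class UserRepository(Protocol):
    """The interface our business logic depends on (hypothetical)."""

    def get(self, email: str) -> Optional[str]: ...

    def save(self, email: str, name: str) -> None: ...


class InMemoryUserRepository:
    """A fake: a real, simplified implementation backed by a dict."""

    def __init__(self) -> None:
        self._users: Dict[str, str] = {}

    def get(self, email: str) -> Optional[str]:
        return self._users.get(email)

    def save(self, email: str, name: str) -> None:
        self._users[email] = name


def register_user(repo: UserRepository, email: str, name: str) -> bool:
    """Register a user; return False if the email is already taken."""
    if repo.get(email) is not None:
        return False
    repo.save(email, name)
    return True


def test_register_user_with_fake() -> None:
    # Behavior through the public API: what can we observe afterwards?
    repo = InMemoryUserRepository()
    assert register_user(repo, "ada@example.com", "Ada") is True
    assert repo.get("ada@example.com") == "Ada"
    assert register_user(repo, "ada@example.com", "Someone Else") is False


def test_register_user_with_mock() -> None:
    # Implementation details: which methods were called, and how.
    repo = Mock()
    repo.get.return_value = None
    assert register_user(repo, "ada@example.com", "Ada") is True
    repo.save.assert_called_once_with("ada@example.com", "Ada")
```

Both tests pass today; the difference shows up during refactoring. If register_user were changed to call a different repository method, the fake would need that method implemented once, in one place, while every mock-based test asserting on save would need to be found and rewritten.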

The larger morals of mocking and testing are out of scope for this article, but if you have questions about any of those points, I encourage you to peruse those five links for a deeper understanding.

Conclusion

Python is a wildly powerful ecosystem that can seem overwhelming at first. Python has become the lingua franca of data science, and although it has plenty of detractors, it isn’t going anywhere any time soon. So to briefly summarize my main points:

- Don’t use relative imports.

- Install uv and play around with its myriad features, both for managing dependencies and publishing packages.

- Windows users: install WSL2 before installing anything else.

- Configure loggers to output things in JSON format, fully utilizing the extra argument.

- Search the Snyk Open Source Advisor when looking for existing packages.

- Use ruff for formatting and lefthook for automation, but make sure to handle mypy carefully.

- Use the pytest integration in VS Code to better test and debug your code.

Hopefully this article illuminated some areas of development you were unsure about, equipped you with powerful new tools, and left you feeling empowered to get started. And let me know in the comments if you disagree with any of my recommendations or have better ideas!